KANS3) Trying out kind

kind

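kind is a tool that builds a Kubernetes environment inside Docker containers, using the docker-in-docker approach I wrote about previously. The lab environment below runs Ubuntu on VirtualBox, using the Vagrant file prepared by gasida. The init_cfg.sh file that the Vagrantfile references during provisioning already installs the basic tools, including kind and kubectl.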

Installation and environment check

# Download the Vagrantfile and init_cfg.sh used to deploy Ubuntu

❯ curl -O https://raw.githubusercontent.com/gasida/KANS/main/kind/Vagrantfile

❯ curl -O https://raw.githubusercontent.com/gasida/KANS/main/kind/init_cfg.sh

# Provision one Ubuntu virtual machine with vagrant

❯ vagrant up

...

# Check the vagrant status and connect

❯ vagrant status

❯ vagrant ssh

# Inside the kind VM

root@kind:~# whoami

root

root@kind:~# hostnamectl

Static hostname: kind

Icon name: computer-vm

Chassis: vm

Machine ID: 70eaa6d6c91f4656a250c177368ecc20

Boot ID: 2e90dc616d85469494b4a84bec8e0c02

Virtualization: oracle

Operating System: Ubuntu 22.04.4 LTS

Kernel: Linux 5.15.0-119-generic

Architecture: x86-64

Hardware Vendor: innotek GmbH

Hardware Model: VirtualBox

root@kind:~# ip -c a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 02:c6:f7:1b:46:08 brd ff:ff:ff:ff:ff:ff

inet 10.0.2.15/24 metric 100 brd 10.0.2.255 scope global dynamic enp0s3

valid_lft 86227sec preferred_lft 86227sec

inet6 fe80::c6:f7ff:fe1b:4608/64 scope link

valid_lft forever preferred_lft forever

3: enp0s8: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 08:00:27:0f:e2:89 brd ff:ff:ff:ff:ff:ff

inet 192.168.50.10/24 brd 192.168.50.255 scope global enp0s8

valid_lft forever preferred_lft forever

inet6 fe80::a00:27ff:fe0f:e289/64 scope link

valid_lft forever preferred_lft forever

4: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 02:42:21:71:08:60 brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

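The first interface, enp0s3, is configured in NAT mode. Traffic leaving the VM goes out through this interface and is translated to the host's IP. Port forwarding is currently set up on it so that SSH can be reached on port 60000.

The second interface, enp0s8, is on a host-only network. It is used when VMs need to talk to each other, or when the host and a VM need to talk.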

# Checked with telnet from the host

# enp0s3 is port-forwarded on port 60000, so connect to 127.0.0.1:60000

❯ telnet 127.0.0.1 60000

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

SSH-2.0-OpenSSH_8.9p1 Ubuntu-3ubuntu0.10

# enp0s8 lets the host and the VM communicate without NAT, so the VM IP on port 22 works directly

❯ telnet 192.168.50.10 22

Trying 192.168.50.10...

Connected to nyoung.com.

Escape character is '^]'.

SSH-2.0-OpenSSH_8.9p1 Ubuntu-3ubuntu0.10

# The connection between enp0s3's IP on port 22 and 10.0.2.2:58115 belongs to the sshd: vagrant process

## In NAT mode, 10.0.2.2 is the gateway IP

root@kind:~# netstat -napl | grep ssh

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 2137/sshd: /usr/sbi

tcp 0 0 10.0.2.15:22 10.0.2.2:58115 ESTABLISHED 4301/sshd: vagrant

tcp6 0 0 :::22 :::* LISTEN 2137/sshd: /usr/sbi

The established connection is on 10.0.2.15:22 rather than 192.168.50.10:22, which confirms that it came in through the port forwarding on enp0s3, the NAT-mode interface. The SSH connection flow is: 127.0.0.1:60000 → 10.0.2.2:58115 → 10.0.2.15:22
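The reasoning above can be sketched as a small shell check: take the local address of the established sshd socket and see which subnet it falls in. The sample line is copied from the netstat output in this post. The function name `classify_ssh_path` and the labels it prints are hypothetical, and the subnet patterns assume the addressing used in this lab (10.0.2.x for NAT, 192.168.50.x for host-only).

```shell
#!/bin/sh
# Sample line copied from the netstat output in this post.
line='tcp 0 0 10.0.2.15:22 10.0.2.2:58115 ESTABLISHED 4301/sshd: vagrant'

# Hypothetical helper: print which path an sshd connection took,
# judged by the local address (4th column of the netstat output).
classify_ssh_path() {
  local_addr=$(printf '%s\n' "$1" | awk '{print $4}')
  case "${local_addr%:*}" in
    10.0.2.*)     echo "nat-port-forward" ;;  # enp0s3 (NAT) subnet in this lab
    192.168.50.*) echo "host-only" ;;         # enp0s8 (host-only) subnet in this lab
    *)            echo "unknown" ;;
  esac
}

classify_ssh_path "$line"
```

For a live check inside the VM, the same function could be fed the ESTABLISHED lines of `netstat -napl | grep ssh` one at a time.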

Creating a kind cluster and checking the environment

network

# Before creating the cluster

root@kind:~# docker network ls

NETWORK ID NAME DRIVER SCOPE

c4a3e6bcfdff bridge bridge local

36a9773d81fa host host local

aacb4af19c13 none null local

# Create the cluster

root@kind:~# cat << EOT > kind-2node.yaml

# two node (one worker) cluster config

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

- role: worker

EOT

root@kind:~# kind create cluster --config kind-2node.yaml --name myk8s

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.31.0) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

Set kubectl context to "kind-myk8s"

You can now use your cluster with:

kubectl cluster-info --context kind-myk8s

Have a nice day! 👋

# Check the cluster

(⎈|kind-myk8s:N/A) root@kind:~# kind get nodes --name myk8s

myk8s-worker

myk8s-control-plane

(⎈|kind-myk8s:N/A) root@kind:~# kubectl cluster-info

Kubernetes control plane is running at https://127.0.0.1:33455

CoreDNS is running at https://127.0.0.1:33455/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

# Looking at the containers, they are named after the control plane and the worker node.

(⎈|kind-myk8s:N/A) root@kind:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bc4534e3ecac kindest/node:v1.31.0 "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes myk8s-worker

5891b81cfe35 kindest/node:v1.31.0 "/usr/local/bin/entr…" 2 minutes ago Up 2 minutes 127.0.0.1:33455->6443/tcp myk8s-control-plane

# Checking which process uses port 6443 in the control-plane container shows kube-apiserver.

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-control-plane ss -tnlp | grep 6443

LISTEN 0 4096 *:6443 *:* users:(("kube-apiserver",pid=575,fd=3))

# The kube-apiserver process in the control-plane container runs as a pod

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get pod -n kube-system -l component=kube-apiserver -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-apiserver-myk8s-control-plane 1/1 Running 0 2m38s 172.18.0.2 myk8s-control-plane <none> <none>

# Running docker network ls again shows a new network named kind.

(⎈|kind-myk8s:N/A) root@kind:~# docker network ls

NETWORK ID NAME DRIVER SCOPE

c4a3e6bcfdff bridge bridge local

36a9773d81fa host host local

9fb3efb629ed kind bridge local

aacb4af19c13 none null local

# Subnet used by kind: 172.18.0.0/16

(⎈|kind-myk8s:N/A) root@kind:~# docker inspect kind | jq

[

{

"Name": "kind",

"Id": "9fb3efb629ede680c24bdf527c5c221b18c69f8ea2929dbdfdc242e2ec344917",

"Created": "2024-09-08T00:27:02.831474838+09:00",

"Scope": "local",

"Driver": "bridge",

"EnableIPv6": true,

"IPAM": {

"Driver": "default",

"Options": {},

"Config": [

{

"Subnet": "fc00:f853:ccd:e793::/64"

},

{

"Subnet": "172.18.0.0/16",

"Gateway": "172.18.0.1"

}

]

...

]

# The kind bridge interface added after the cluster was installed

(⎈|kind-myk8s:N/A) root@kind:~# ip -c a

...

5: br-9fb3efb629ed: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default

link/ether 02:42:af:1a:63:36 brd ff:ff:ff:ff:ff:ff

inet 172.18.0.1/16 brd 172.18.255.255 scope global br-9fb3efb629ed

...

Checking with kubectl

# cri: containerd

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get node -owide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

myk8s-control-plane Ready control-plane 19m v1.31.0 172.18.0.2 <none> Debian GNU/Linux 12 (bookworm) 5.15.0-119-generic containerd://1.7.18

myk8s-worker Ready <none> 19m v1.31.0 172.18.0.3 <none> Debian GNU/Linux 12 (bookworm) 5.15.0-119-generic containerd://1.7.18

# cni: kindnet

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get pod -A -owide

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

kube-system coredns-6f6b679f8f-s9czc 1/1 Running 0 20m 10.244.0.4 myk8s-control-plane <none> <none>

kube-system coredns-6f6b679f8f-vh5fb 1/1 Running 0 20m 10.244.0.3 myk8s-control-plane <none> <none>

kube-system etcd-myk8s-control-plane 1/1 Running 0 20m 172.18.0.2 myk8s-control-plane <none> <none>

kube-system kindnet-8x77g 1/1 Running 0 19m 172.18.0.3 myk8s-worker <none> <none>

kube-system kindnet-v6rwq 1/1 Running 0 20m 172.18.0.2 myk8s-control-plane <none> <none>

kube-system kube-apiserver-myk8s-control-plane 1/1 Running 0 20m 172.18.0.2 myk8s-control-plane <none> <none>

kube-system kube-controller-manager-myk8s-control-plane 1/1 Running 0 20m 172.18.0.2 myk8s-control-plane <none> <none>

kube-system kube-proxy-l97cd 1/1 Running 0 20m 172.18.0.2 myk8s-control-plane <none> <none>

kube-system kube-proxy-tvqp6 1/1 Running 0 19m 172.18.0.3 myk8s-worker <none> <none>

kube-system kube-scheduler-myk8s-control-plane 1/1 Running 0 20m 172.18.0.2 myk8s-control-plane <none> <none>

local-path-storage local-path-provisioner-57c5987fd4-j65ll 1/1 Running 0 20m 10.244.0.2 myk8s-control-plane <none> <none>

# local-path-storage manages volumes.

# A default StorageClass (named standard, provisioner rancher.io/local-path) is installed and uses the node's local storage.

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

standard (default) rancher.io/local-path Delete WaitForFirstConsumer false 25m

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get deploy -n local-path-storage

NAME READY UP-TO-DATE AVAILABLE AGE

local-path-provisioner 1/1 1 1 25m
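To see the default StorageClass in action, a minimal PersistentVolumeClaim could look like the sketch below. The file name `pvc-demo.yaml` and the claim name `demo-pvc` are made up for illustration and do not appear in the original lab. Because the binding mode shown above is WaitForFirstConsumer, the claim should stay Pending until a pod that uses it is scheduled.

```shell
# Write a minimal PVC manifest (names are hypothetical, not from the original post).
cat <<'EOF' > pvc-demo.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-pvc
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: standard   # the default StorageClass listed by kubectl get sc
  resources:
    requests:
      storage: 1Gi
EOF

# Then, inside the VM where kubectl points at the kind cluster:
#   kubectl apply -f pvc-demo.yaml
#   kubectl get pvc demo-pvc
```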

Checking by entering the containers

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-control-plane tree /etc/kubernetes/manifests/

/etc/kubernetes/manifests/

|-- etcd.yaml

|-- kube-apiserver.yaml

|-- kube-controller-manager.yaml

`-- kube-scheduler.yaml

1 directory, 4 files

# Enter the control-plane container

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-control-plane bash

root@myk8s-control-plane:/# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/etc/systemd/system/kubelet.service; enabled; preset: enabled)

Drop-In: /etc/systemd/system/kubelet.service.d

└─10-kubeadm.conf, 11-kind.conf

Active: active (running) since Sat 2024-09-07 15:27:31 UTC; 3h 27min ago

...

root@myk8s-control-plane:/# systemctl status containerd

● containerd.service - containerd container runtime

Loaded: loaded (/etc/systemd/system/containerd.service; enabled; preset: enabled)

Active: active (running) since Sat 2024-09-07 15:27:12 UTC; 3h 36min ago

...

# To see the containers inside a node, use crictl rather than docker.

root@myk8s-control-plane:/# docker ps

bash: docker: command not found

# This lists containers that docker ps, run outside the node container, did not show.

root@myk8s-control-plane:/# crictl ps

CONTAINER IMAGE CREATED STATE NAME ATTEMPT POD ID POD

afe2d8015c2e3 cbb01a7bd410d 3 hours ago Running coredns 0 21d473a40b515 coredns-6f6b679f8f-vh5fb

b76926dc718ff cbb01a7bd410d 3 hours ago Running coredns 0 f1754c08ce8d1 coredns-6f6b679f8f-s9czc

7887c1f9ae9b0 3a195b56ff154 3 hours ago Running local-path-provisioner 0 d125d965756df local-path-provisioner-57c5987fd4-j65ll

3f8ac2aaefdaf 12968670680f4 3 hours ago Running kindnet-cni 0 25cd30c024e19 kindnet-v6rwq

43e8e4fd649ff af3ec60a3d89b 3 hours ago Running kube-proxy 0 691137d82fefc kube-proxy-l97cd

f82ebfc4c13d5 2e96e5913fc06 3 hours ago Running etcd 0 e9e4e10d71678 etcd-myk8s-control-plane

80597733e1b9e 7e9a7dc204d9d 3 hours ago Running kube-controller-manager 0 4cfbd9fdffbd2 kube-controller-manager-myk8s-control-plane

51c5a1b80615e 4f8c99889f8e4 3 hours ago Running kube-apiserver 0 c3b19bed36904 kube-apiserver-myk8s-control-plane

c827d2bd0516d 418e326664bd2 3 hours ago Running kube-scheduler 0 e6aab7c36a0f5 kube-scheduler-myk8s-control-plane

# The processes behind each container

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-control-plane bash

root@myk8s-control-plane:/# pstree

systemd-+-containerd---19*[{containerd}]

|-containerd-shim-+-kube-apiserver---11*[{kube-apiserver}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-etcd---10*[{etcd}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-kube-controller---6*[{kube-controller}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-kube-scheduler---9*[{kube-scheduler}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-kube-proxy---6*[{kube-proxy}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-kindnetd---9*[{kindnetd}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-local-path-prov---9*[{local-path-prov}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-coredns---9*[{coredns}]

| |-pause

| `-12*[{containerd-shim}]

|-containerd-shim-+-coredns---10*[{coredns}]

| |-pause

| `-12*[{containerd-shim}]

|-kubelet---13*[{kubelet}]

`-systemd-journal

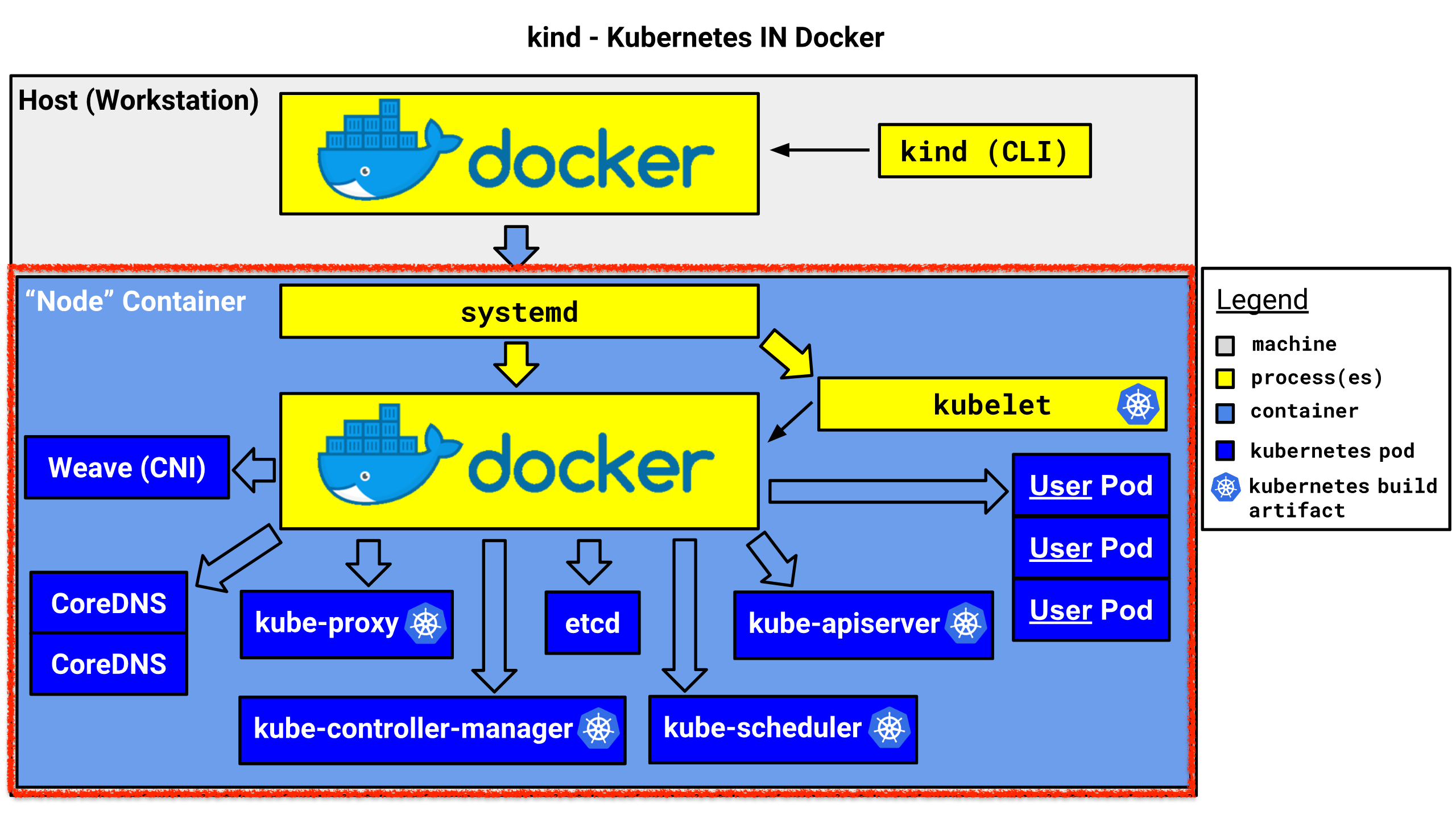

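The kind command makes it easy to set up a cluster inside node containers. As the image shows, the Kubernetes components run as containers inside the node container, and a single kind command takes care of all of it.

- Host (Workstation) = the VirtualBox VM

- Node Container = the control-plane container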

multi node cluster(Control-plane, Nodes)

# Recreate the cluster with the required port mappings added (an existing cluster with the same name has to be deleted first, e.g. kind delete cluster --name myk8s)

(⎈|kind-myk8s:N/A) root@kind:~# cat <<EOT> kind-2node.yaml

# two node (one worker) cluster config

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

- role: worker

  extraPortMappings:

  - containerPort: 30000

    hostPort: 30000

    listenAddress: "0.0.0.0" # Optional, defaults to "0.0.0.0"

    protocol: tcp # Optional, defaults to tcp

  - containerPort: 30001

    hostPort: 30001

EOT

(⎈|kind-myk8s:N/A) root@kind:~# kind create cluster --config kind-2node.yaml --name myk8s

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.31.0) 🖼

✓ Preparing nodes 📦 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

✓ Joining worker nodes 🚜

...

(⎈|kind-myk8s:N/A) root@kind:~# kind get clusters

myk8s

(⎈|kind-myk8s:N/A) root@kind:~# kind get nodes --name myk8s

myk8s-control-plane

myk8s-worker

# Check the nodes

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

myk8s-control-plane Ready control-plane 3m46s v1.31.0 172.18.0.2 <none> Debian GNU/Linux 12 (bookworm) 5.15.0-119-generic containerd://1.7.18

myk8s-worker Ready <none> 3m31s v1.31.0 172.18.0.3 <none> Debian GNU/Linux 12 (bookworm) 5.15.0-119-generic containerd://1.7.18

# Check the nodes, which are containers

(⎈|kind-myk8s:N/A) root@kind:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

9eeb73110096 kindest/node:v1.31.0 "/usr/local/bin/entr…" 39 minutes ago Up 38 minutes 127.0.0.1:33763->6443/tcp myk8s-control-plane

2ef49f5b3f11 kindest/node:v1.31.0 "/usr/local/bin/entr…" 39 minutes ago Up 38 minutes 0.0.0.0:30000-30001->30000-30001/tcp myk8s-worker

# As the PORTS column of docker ps shows, host_ip:30000 is port-forwarded to port 30000 of the worker node container.

(⎈|kind-myk8s:N/A) root@kind:~# docker port myk8s-worker

30000/tcp -> 0.0.0.0:30000

30001/tcp -> 0.0.0.0:30001
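The mapped ports only help if a Service later uses the same number as its nodePort. A rough pre-check is sketched below, assuming the default Kubernetes NodePort range of 30000-32767 (a cluster can be configured with a different range). The helper name `in_nodeport_range` is hypothetical.

```shell
# Hypothetical helper: succeed if the port lies in the default NodePort range.
in_nodeport_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}

# 30000 and 30001 are the ports mapped in kind-2node.yaml; 8080 is a counterexample.
for port in 30000 30001 8080; do
  if in_nodeport_range "$port"; then
    echo "$port: usable as nodePort"
  else
    echo "$port: outside the default NodePort range"
  fi
done
```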

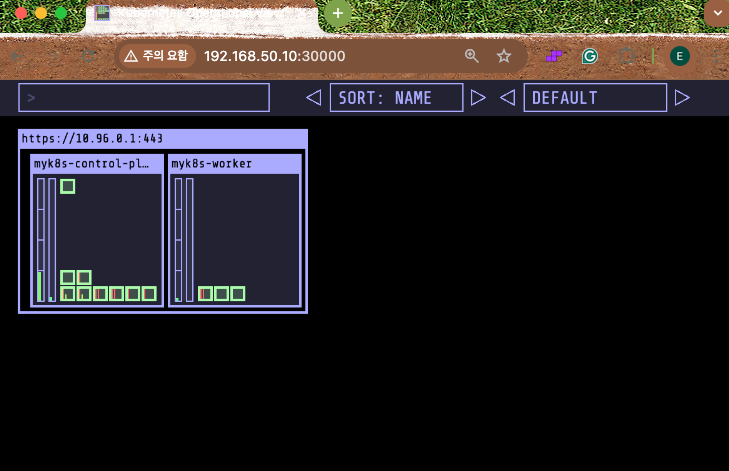

# Install kube-ops-view, whose Service is created with NodePort 30000

(⎈|kind-myk8s:N/A) root@kind:~# helm repo add geek-cookbook https://geek-cookbook.github.io/charts/

(⎈|kind-myk8s:N/A) root@kind:~# helm install kube-ops-view geek-cookbook/kube-ops-view --version 1.2.2 --set service.main.type=NodePort,service.main.ports.http.nodePort=30000 --set env.TZ="Asia/Seoul" --namespace kube-system

Building a Docker image

# index.html file

echo "Hello TestPage" > index.html

# Dockerfile

cat << EOF > Dockerfile

FROM nginx:alpine

COPY index.html /usr/share/nginx/html/

EXPOSE 80

CMD ["nginx", "-g", "daemon off;"]

EOF

# Build the image

(⎈|kind-myk8s:N/A) root@kind:~# docker build -t nyoung-web:1.0 .

[+] Building 6.1s (7/7) FINISHED

(⎈|kind-myk8s:N/A) root@kind:~# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

nyoung-web 1.0 2ff91a6a9931 29 seconds ago 43.2MB

kindest/node <none> b5cb8c3b1441 3 weeks ago 1.03GB

# Searching the node images for nyoung currently returns nothing

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-control-plane crictl images | grep nyoung

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-worker crictl images | grep nyoung

# Load the Docker image into kind

(⎈|kind-myk8s:N/A) root@kind:~# kind load docker-image nyoung-web:1.0 --name myk8s

Image: "nyoung-web:1.0" with ID "sha256:2ff91a6a99314a4d6af2654795f7a9c85f18f6aa562b55927571f011335b0d1e" not yet present on node "myk8s-control-plane", loading...

Image: "nyoung-web:1.0" with ID "sha256:2ff91a6a99314a4d6af2654795f7a9c85f18f6aa562b55927571f011335b0d1e" not yet present on node "myk8s-worker", loading...

# Searching again now finds the image

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-control-plane crictl images | grep nyoung

docker.io/library/nyoung-web 1.0 2ff91a6a99314 44.6MB

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-worker crictl images | grep nyoung

docker.io/library/nyoung-web 1.0 2ff91a6a99314 44.6MB

# Create a deployment using the loaded image

(⎈|kind-myk8s:N/A) root@kind:~# kubectl create deployment deploy-myweb --image=nyoung-web:1.0

deployment.apps/deploy-myweb created

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get deploy,pod

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/deploy-myweb 1/1 1 1 8s

NAME READY STATUS RESTARTS AGE

pod/deploy-myweb-7648bb6c46-dljxz 1/1 Running 0 8s

# Deploy a Service that uses a NodePort

cat <<EOF | kubectl create -f -

apiVersion: v1

kind: Service

metadata:

  name: deploy-myweb

spec:

  type: NodePort

  ports:

  - name: svc-mywebport

    nodePort: 30001

    port: 80

  selector:

    app: deploy-myweb

EOF

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get svc,ep deploy-myweb

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/deploy-myweb NodePort 10.96.18.139 <none> 80:30001/TCP 34s

NAME ENDPOINTS AGE

endpoints/deploy-myweb 10.244.1.3:80 34s

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get po -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-myweb-7648bb6c46-dljxz 1/1 Running 0 6m1s 10.244.1.3 myk8s-worker <none> <none>

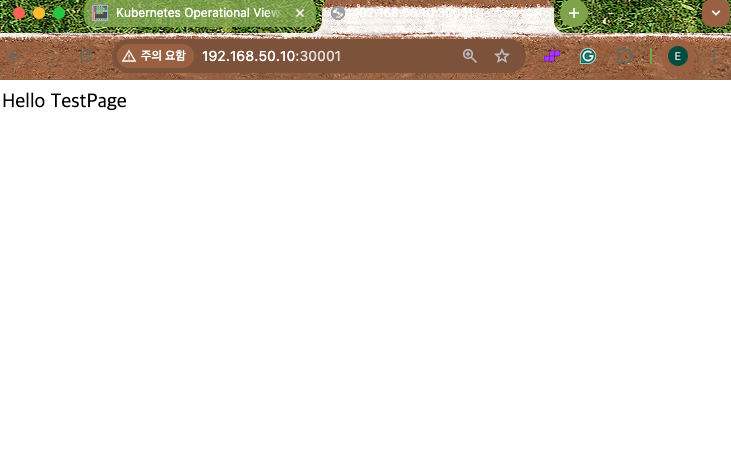

# Run on the host

❯ curl 192.168.50.10:30001

Hello TestPage

ingress

# YAML for deploying the cluster

cat <<EOT> kind-ingress.yaml

kind: Cluster

apiVersion: kind.x-k8s.io/v1alpha4

nodes:

- role: control-plane

  kubeadmConfigPatches:

  - |

    kind: InitConfiguration

    nodeRegistration:

      kubeletExtraArgs:

        node-labels: "ingress-ready=true"

  extraPortMappings:

  - containerPort: 80

    hostPort: 80

    protocol: TCP

  - containerPort: 443

    hostPort: 443

    protocol: TCP

  - containerPort: 30000

    hostPort: 30000

EOT

(⎈|N/A:N/A) root@kind:~# kind create cluster --config kind-ingress.yaml --name myk8s

Creating cluster "myk8s" ...

✓ Ensuring node image (kindest/node:v1.31.0) 🖼

✓ Preparing nodes 📦

✓ Writing configuration 📜

✓ Starting control-plane 🕹️

✓ Installing CNI 🔌

✓ Installing StorageClass 💾

(⎈|kind-myk8s:N/A) root@kind:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2dd75c82d061 kindest/node:v1.31.0 "/usr/local/bin/entr…" 56 seconds ago Up 52 seconds 0.0.0.0:80->80/tcp, 0.0.0.0:443->443/tcp, 0.0.0.0:30000->30000/tcp, 127.0.0.1:36839->6443/tcp myk8s-control-plane

# Confirm that the ports match the mappings in the YAML used to create the cluster

(⎈|kind-myk8s:N/A) root@kind:~# docker port myk8s-control-plane

80/tcp -> 0.0.0.0:80

443/tcp -> 0.0.0.0:443

6443/tcp -> 127.0.0.1:36839

30000/tcp -> 0.0.0.0:30000

# Check the ingress-ready label set when the cluster was deployed

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get nodes myk8s-control-plane -o jsonpath={.metadata.labels.ingress-ready}

true

# Install the ingress controller

(⎈|kind-myk8s:N/A) root@kind:~# kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/main/deploy/static/provider/kind/deploy.yaml

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get deploy,svc,ep ingress-nginx-controller -n ingress-nginx -owide

NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/ingress-nginx-controller 1/1 1 1 83s controller registry.k8s.io/ingress-nginx/controller:v1.11.2@sha256:d5f8217feeac4887cb1ed21f27c2674e58be06bd8f5184cacea2a69abaf78dce app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

service/ingress-nginx-controller NodePort 10.96.114.249 <none> 80:30243/TCP,443:31344/TCP 85s app.kubernetes.io/component=controller,app.kubernetes.io/instance=ingress-nginx,app.kubernetes.io/name=ingress-nginx

NAME ENDPOINTS AGE

endpoints/ingress-nginx-controller 10.244.0.7:443,10.244.0.7:80 85s

# ingress controller pod ip

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get po -owide -n ingress-nginx

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

ingress-nginx-admission-create-fvgjv 0/1 Completed 0 3m43s 10.244.0.5 myk8s-control-plane <none> <none>

ingress-nginx-admission-patch-c4rrw 0/1 Completed 0 3m43s 10.244.0.6 myk8s-control-plane <none> <none>

ingress-nginx-controller-6b8cfc8d84-d8m6g 1/1 Running 0 3m43s 10.244.0.7 myk8s-control-plane <none> <none>

# On the control plane, check the iptables rules that target the ingress controller pod IP

(⎈|kind-myk8s:N/A) root@kind:~# docker exec -it myk8s-control-plane iptables -t nat -L -n -v | grep '10.244.0.7'

0 0 DNAT 6 -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:80 to:10.244.0.7:80

0 0 DNAT 6 -- * * 0.0.0.0/0 0.0.0.0/0 tcp dpt:443 to:10.244.0.7:443

Test deployment

ip

# A sample that routes requests to foo or bar by path

(⎈|kind-myk8s:N/A) root@kind:~# kubectl apply -f https://kind.sigs.k8s.io/examples/ingress/usage.yaml

pod/foo-app created

service/foo-service created

pod/bar-app created

service/bar-service created

ingress.networking.k8s.io/example-ingress created

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get ingress,pod -owide

NAME CLASS HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/example-ingress <none> * localhost 80 37s

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/bar-app 1/1 Running 0 37s 10.244.0.9 myk8s-control-plane <none> <none>

pod/foo-app 1/1 Running 0 37s 10.244.0.8 myk8s-control-plane <none> <none>

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get svc,ep foo-service bar-service

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/foo-service ClusterIP 10.96.179.35 <none> 8080/TCP 41s

service/bar-service ClusterIP 10.96.50.31 <none> 8080/TCP 41s

NAME ENDPOINTS AGE

endpoints/foo-service 10.244.0.8:8080 41s

endpoints/bar-service 10.244.0.9:8080 41s

# Each path is mapped to its own service

(⎈|kind-myk8s:N/A) root@kind:~# kubectl describe ingress example-ingress

Name: example-ingress

Labels: <none>

Namespace: default

Address: localhost

Ingress Class: <none>

Default backend: <default>

Rules:

Host Path Backends

---- ---- --------

*

/foo(/|$)(.*) foo-service:8080 (10.244.0.8:8080)

/bar(/|$)(.*) bar-service:8080 (10.244.0.9:8080)

Annotations: nginx.ingress.kubernetes.io/rewrite-target: /$2

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Sync 24s (x2 over 46s) nginx-ingress-controller Scheduled for sync

# Keep log monitoring running in terminal 1

(⎈|kind-myk8s:N/A) root@kind:~# kubectl logs -n ingress-nginx deploy/ingress-nginx-controller -f

...

192.168.50.1 - - [07/Sep/2024:20:56:15 +0000] "GET /foo/hostname HTTP/1.1" 200 7 "-" "curl/8.4.0" 88 0.003 [default-foo-service-8080] [] 10.244.0.8:8080 7 0.002 200 7d3935849d0f386b3adfb37f74a60ae2

192.168.50.1 - - [07/Sep/2024:20:56:25 +0000] "GET /bar/hostname HTTP/1.1" 200 7 "-" "curl/8.4.0" 88 0.002 [default-bar-service-8080] [] 10.244.0.9:8080 7 0.002 200 61ae6d42666949f4f73b0c846cc3410f

# In terminal 2, run the following

❯ curl 192.168.50.10/foo/hostname

foo-app%

❯ curl 192.168.50.10/bar/hostname

bar-app%

domain

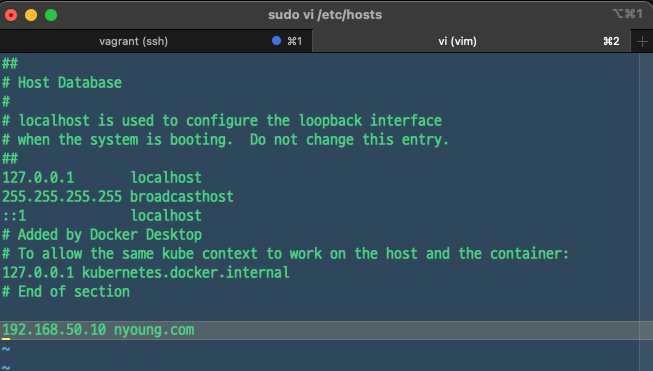

The host needs to be able to resolve the domain, so I first edited /etc/hosts on the host, mapping the enp0s8 IP to the domain.
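As a minimal sketch of that mapping, the snippet below formats the /etc/hosts line from the enp0s8 address and the domain used in this post. The helper name `hosts_entry` is hypothetical, and the commented commands are suggestions rather than steps taken in the original lab.

```shell
VM_IP="192.168.50.10"   # enp0s8 address from this post
DOMAIN="nyoung.com"     # domain used in this post

# Hypothetical helper: format one /etc/hosts line ("<ip> <name>").
hosts_entry() {
  printf '%s %s\n' "$1" "$2"
}

hosts_entry "$VM_IP" "$DOMAIN"

# To append it on the host (needs root):
#   hosts_entry "$VM_IP" "$DOMAIN" | sudo tee -a /etc/hosts
# To test without touching /etc/hosts, curl can pin the name to the IP:
#   curl --resolve "$DOMAIN:80:$VM_IP" "http://$DOMAIN/foo/hostname"
```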

(⎈|kind-myk8s:N/A) root@kind:~# kubectl get ingress example-ingress -o yaml > ingress.yaml

(⎈|kind-myk8s:N/A) root@kind:~# vi ingress.yaml

...

spec:

  rules:

  - host: nyoung.com

    http:

      paths:

      - path: /foo

        backend:

          service:

            name: foo-service

            port:

              number: 8080

      - path: /bar

        backend:

          service:

            name: bar-service

            port:

              number: 8080

...
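If only the host needs to change, a non-interactive alternative to editing the exported YAML is a JSON Patch that adds `host` to the first rule and leaves the paths and backends untouched. This is a sketch, not what the original lab did: the helper names `host_patch` and `set_ingress_host` are hypothetical, and it assumes the ingress has a single rule at index 0, as in the sample above.

```shell
DOMAIN="nyoung.com"

# Hypothetical helper: build a JSON Patch that adds a host to the first ingress rule.
host_patch() {
  printf '[{"op":"add","path":"/spec/rules/0/host","value":"%s"}]' "$1"
}

# Hypothetical wrapper; call it inside the VM where kubectl points at the kind cluster.
set_ingress_host() {
  kubectl patch ingress example-ingress --type=json -p "$(host_patch "$1")"
}

# Print the patch so it can be reviewed before applying.
host_patch "$DOMAIN"; echo
# set_ingress_host "$DOMAIN"
```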

(⎈|kind-myk8s:N/A) root@kind:~# kubectl apply -f ingress.yaml

# Run on the host

❯ curl nyoung.com/foo/hostname

foo-app%

❯ curl nyoung.com/bar/hostname

bar-app%

References